Before jumping into high availability, it would be really good to get all readers on the same page about clustering technology as well. I recently went through the history of clustering to get an idea about it, and interestingly enough there is a lot more to clustering than meets the eye :) Some history info about clustering can be found over here

What is clustering - In its most elementary definition, a server cluster is at least two independent computers that are logically and sometimes physically joined and presented to a network as a single host. That is to say, although each computer (called a node) in a cluster has its own resources, such as CPUs, RAM, hard drives, network cards, etc., the cluster as such is advertised to the network as a single host name with a single Internet Protocol (IP) address. As far as network users are concerned, the cluster is a single server, not a rack of two, four, eight or however many nodes comprise the cluster resource group.

Why cluster - Availability: Avoids problems resulting from systems failures.

Scalability: Additional systems can be added as needs increase.

Lower Cost: Supercomputer power at commodity prices.

What are the cluster types -

- Distributed Processing Clusters

  - Used to increase the speed of large computational tasks.

  - Tasks are broken down and worked on by many small systems rather than one large system (parallel processing).

  - Often deployed for tasks previously handled only by supercomputers.

  - Used for scientific or financial analysis.

- Failover Clusters

  - Used to increase the availability and serviceability of network services.

  - A given application runs on only one of the nodes, but each node can run one or more applications.

  - Each node or application has a unique identity visible to the “outside world.”

  - When an application or node fails, its services are migrated to another node.

  - The identity of the failed node is also migrated.

  - Works with most applications as long as they are scriptable.

  - Used for database servers, mail servers or file servers.

- High Availability Load Balancing Clusters

  - Used to increase the availability, serviceability and scalability of network services.

  - A given application runs on all of the nodes and a given node can host multiple applications.

  - The “outside world” interacts with the cluster and individual nodes are “hidden.”

  - Large cluster pools are supported.

  - When a node or service fails, it is removed from the cluster. No failover is necessary.

  - Applications do not need to be specialized, but HA clustering works best with stateless applications that can be run concurrently.

  - Systems do not need to be homogeneous.

  - Used for web servers, mail servers or FTP servers.
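To make the difference between the last two types a bit more concrete, here is a small Python sketch of how each one reacts to a node failure. It is only a toy model: the class names, node names and the "pick the first surviving node" rule are my own assumptions for illustration, not how any real cluster service chooses a target.

```python
from dataclasses import dataclass


@dataclass
class FailoverCluster:
    """Toy model: each application runs on exactly one node at a time."""
    placement: dict[str, str]   # application name -> node currently hosting it
    nodes: set[str]             # nodes that are still alive

    def fail_node(self, node: str) -> None:
        self.nodes.discard(node)
        if not self.nodes:
            raise RuntimeError("no surviving node to fail over to")
        # Assumption for the sketch: always move to the first surviving node by name.
        target = sorted(self.nodes)[0]
        for app, host in self.placement.items():
            if host == node:
                self.placement[app] = target   # the service migrates to a survivor


@dataclass
class LoadBalancedCluster:
    """Toy model: every node runs the application, requests rotate over the pool."""
    pool: list[str]
    _next: int = 0

    def fail_node(self, node: str) -> None:
        # No failover needed: the failed node is simply removed from the pool.
        self.pool.remove(node)

    def route(self) -> str:
        node = self.pool[self._next % len(self.pool)]
        self._next += 1
        return node


fo = FailoverCluster(placement={"sql": "node1", "mail": "node2"}, nodes={"node1", "node2"})
fo.fail_node("node1")
print(fo.placement)                    # {'sql': 'node2', 'mail': 'node2'}

lb = LoadBalancedCluster(pool=["web1", "web2", "web3"])
lb.fail_node("web2")
print([lb.route() for _ in range(4)])  # ['web1', 'web3', 'web1', 'web3']
```

In the failover case the application moves; in the load balancing case nothing moves, the pool just gets smaller.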

Now, coming back to Microsoft clustering: it goes back to the good old NT 4.0 era, under the code name “Wolfpack”. From there it grew step by step and came to shine in the Windows 2000 period, giving customers confidence in the stability of Microsoft clustering technology. Field engineers who have configured Windows 2003 clustering will know how painful and lengthy the configuration steps are. With Windows 2003 R2, Microsoft offered various tools and wizards to make the clustering process less painful for engineers. If you’re planning to configure Windows 2003 clustering, one place you should definitely look into is this site.

Now we’re in the Windows 2008 era, and clustering has improved dramatically in both configuration and stability. The new name for clustering is “Windows Failover Clustering”.

As I have been telling audiences in public sessions, clustering is no longer a technology for the enterprise market only. It can be used by the SMB and SME market as well, at a fraction of the cost. As usual I will be focusing on Hyper-V and how combining it with clustering can help users get the maximum benefit from virtualization and high availability. Hyper-V is Microsoft’s flagship virtualization technology. It’s a 100% bare-metal hypervisor. There is a common misconception that Hyper-V is not a true hypervisor; the main argument is that you need Windows 2008 to run Hyper-V. This is wrong!!! You can install the Hyper-V hypervisor software on a bare-metal server and set up the virtual machines. The free, Hyper-V-only version can be downloaded from here. Comparisons of Hyper-V can be found over here.

So now we have some idea about clustering technology. How can it be applied to a Hyper-V environment to get a highly available virtual environment? Let’s have a look at a recommended setup for this scenario:

According to the picture, we’ll need 2 physical servers. We’ll call them Host1 and Host2. Each host must be 64-bit and have a processor that supports virtualization. Apart from that, Microsoft recommends using hardware certified by MS. So, based on my knowledge, I would say the ideal environment is as follows:

1. Branded servers with Intel Xeon quad-core processors (better to have a 2-socket motherboard for future expansion).

2. 8 GB memory and a minimum of 3 NICs. It’s always better to have additional NICs.

3. 2 x 76 GB SAS or SATA HDDs for the host operating system.

4. SAN storage. (Just hold on there folks, there is an easy way to solve this expensive matter… :)

The above system is fully capable of handling a decent workload. Now the configuration part :) I’ll try to summarize the steps, along with additional tips where necessary:

1. Install Windows 2008 Enterprise or Datacenter edition on each host computer. Make sure both of them get the latest updates and that both hosts have the same updates for all software.

2. Go ahead and install the Hyper-V role.

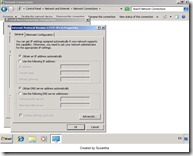

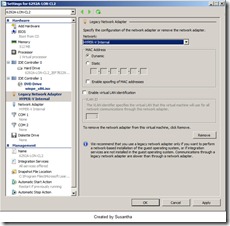

3. Configure the NICs accordingly. Taking one host as the example, the NIC configuration will be as follows:

a) One NIC will be connected to your production environment, so you can add the IP address, default gateway, subnet mask and DNS.

b) The second NIC will be the heartbeat connection between the 2 host servers, so add the IP address and subnet mask only. Make sure it is on a totally different IP subnet.

c) The third NIC will be configured to communicate with the SAN storage. I'm assuming we’ll be using iSCSI over the IP network.

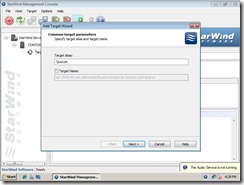
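If you want to double-check the addressing plan before typing it into the NIC properties, a few lines of Python with the standard `ipaddress` module can confirm that the three networks don't overlap. The addresses below are made-up examples for Host1, not values from this setup, so replace them with your own.

```python
import ipaddress
from itertools import combinations

# Hypothetical addressing plan for Host1 (example values only).
HOST1_NICS = {
    "production": "192.168.10.11/24",
    "heartbeat": "10.10.10.1/24",
    "iscsi": "172.16.50.11/24",
}


def overlapping_roles(nics: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of NIC roles whose subnets overlap."""
    networks = {role: ipaddress.ip_interface(addr).network for role, addr in nics.items()}
    return [
        (a, b)
        for a, b in combinations(networks, 2)
        if networks[a].overlaps(networks[b])
    ]


# An empty list means production, heartbeat and iSCSI are on separate subnets.
print(overlapping_roles(HOST1_NICS))
```

If the heartbeat NIC accidentally ends up in the production range, the function returns that pair so you can spot it right away.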

4. Now, for the SAN storage you can go ahead and buy expensive SAN storage from HP, Dell or EMC (no offence, guys :) ), but there are customers who can’t afford that price tag. For them the good news is that you can convert your existing servers into SAN storage. We’re talking about converting your existing x86 systems into software-based SAN storage that uses the iSCSI protocol. There are third-party companies that provide software for this. Personally I prefer StarWind iSCSI software.

So all you have to do is add enough HDD space to your server and then use the third-party iSCSI software to convert your system into SAN storage. This will be the central storage for the two Hyper-V enabled host computers.

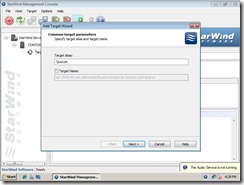

4. Go ahead and create the necessary storage on the SAN server. How to create the cluster quorum disk and the other disks is covered in the relevant storage vendor’s documentation. For the quorum disk, try to make it 512 MB if possible, but most SAN storage won’t allow you to create a LUN below 1024 MB, so in that case act accordingly. (Anyway, here are a few steps showing how to create the relevant disks under StarWind.)

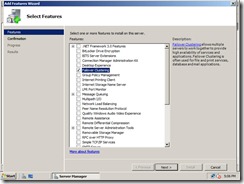

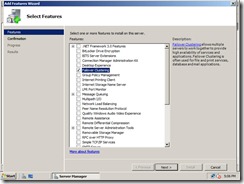
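The quorum sizing rule above boils down to "512 MB if the storage lets you, otherwise the smallest LUN it allows". As a tiny Python sketch (the function name is my own, and the minimum you pass in has to come from your storage vendor's documentation):

```python
PREFERRED_QUORUM_MB = 512  # quorum size suggested in the step above


def quorum_lun_size_mb(array_minimum_mb: int) -> int:
    """Return the preferred quorum size, or the array's minimum LUN size if that is larger."""
    if array_minimum_mb <= 0:
        raise ValueError("minimum LUN size must be positive")
    return max(PREFERRED_QUORUM_MB, array_minimum_mb)


print(quorum_lun_size_mb(1024))  # 1024: the array will not go below its own minimum
print(quorum_lun_size_mb(100))   # 512: the array allows small LUNs, so use the preferred size
```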

5. Go to one host computer and add the Failover Clustering feature, then repeat on the other host; every node in the cluster needs it.

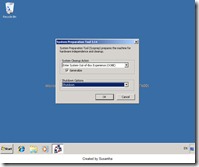

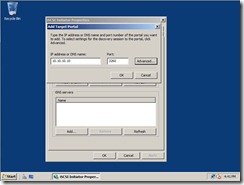

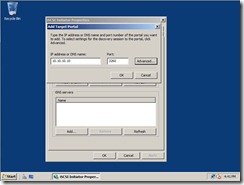

6. Go to the iSCSI initiator on Host1 and connect to the SAN storage. As seen in the picture, click Add Portal and enter the IP address of the SAN storage. Once connected, it’ll show the relevant disk mappings. (That’s easy in Windows 2008 R2 now.)
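If the initiator cannot see the target, it helps to rule out basic network problems first. The sketch below only checks that a TCP connection to the portal can be opened; it does not speak the iSCSI protocol. Port 3260 is the standard iSCSI port, and I'm assuming your iSCSI software is listening on that default.

```python
import socket

ISCSI_PORT = 3260  # standard iSCSI target port (assumes the target uses the default)


def portal_reachable(host: str, port: int = ISCSI_PORT, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to the iSCSI portal can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable networks.
        return False
```

Call it from each host with the SAN server's address on the storage network, for example `portal_reachable("172.16.50.10")` (a placeholder address). A `False` result points to cabling, IP settings or a firewall rather than the initiator itself.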

7. Once that is complete, go to Disk Management, initialize the disks, format them and assign drive letters accordingly (e.g. drive letter Q for the quorum disk, etc.).

8. Go to Host2, open the iSCSI initiator and add the SAN storage. Go to Disk Management and assign the same drive letters to the disks as configured on Host1.

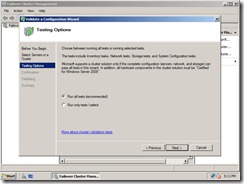

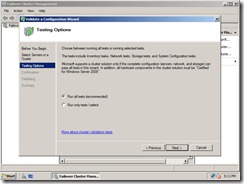
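Since both hosts have to use the same drive letters for the shared disks, it can be worth noting down what you assigned on each host and comparing the two lists. A minimal Python sketch of that comparison follows; the disk labels and letters are hypothetical and typed in by hand, nothing is read from Windows here.

```python
def drive_letter_mismatches(host1: dict[str, str], host2: dict[str, str]) -> dict[str, tuple]:
    """Map each disk label to (host1_letter, host2_letter) wherever the hosts disagree."""
    labels = host1.keys() | host2.keys()
    return {
        label: (host1.get(label), host2.get(label))
        for label in sorted(labels)
        if host1.get(label) != host2.get(label)
    }


# Hypothetical layouts noted down from Disk Management on each host.
host1_disks = {"Quorum": "Q", "Data": "S"}
host2_disks = {"Quorum": "Q", "Data": "T"}

print(drive_letter_mismatches(host1_disks, host2_disks))  # {'Data': ('S', 'T')}
```

A disk that exists on only one host shows up with `None` on the other side, which usually means the iSCSI connection on that host is missing.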

9. Go to the cluster configuration and start setting up the cluster. One cool thing about Windows 2008 cluster setup is the cluster validation wizard. It runs a series of configuration checks to make sure you have carried out the cluster setup steps correctly. This wizard is a must, and you need to keep its report safe in case you need support from Microsoft or other technical personnel. Once the cluster validation is completed, we can go ahead and add the cluster role. In this case we’ll be selecting File Server as our cluster role.

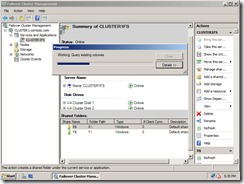

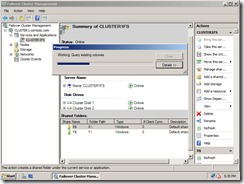

10. Once the cluster validation is completed, go ahead and create a clustered service. In this demonstration I’ll use the clustered file server feature.

Go ahead and give the cluster an administration name, and after that select a disk for the shared storage. For this we’ll use a disk created on the SAN storage.

11. Once that step is completed you’ll be back in the cluster management console. Now you’ll be able to see the cluster server name you’ve created. So we have created the cluster, but we still haven’t shared any storage. Now we’ll go ahead and create a shared folder and add a few files so users can see them.

Now, once we log in from a client PC, we can type the UNC path and access the shared data on the clustered file server :)
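The point to notice is that the UNC path uses the clustered file server name, not Host1 or Host2, so clients keep the same path whichever node currently owns the share. Here is a small Python sketch of how such a path is put together; "FILECLUSTER" and "Shared" are placeholder names I made up, not the ones used in the screenshots.

```python
from pathlib import PureWindowsPath


def unc_path(server: str, share: str, *parts: str) -> PureWindowsPath:
    """Build a UNC path from a server name, a share name and optional sub-paths."""
    return PureWindowsPath(f"\\\\{server}\\{share}", *parts)


# Placeholder names: use your own cluster file server name and share name.
p = unc_path("FILECLUSTER", "Shared", "docs", "readme.txt")
print(p)        # \\FILECLUSTER\Shared\docs\readme.txt
print(p.drive)  # \\FILECLUSTER\Shared
```

`PureWindowsPath` only manipulates the text of the path, so this runs on any operating system and never touches the network.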

Phew…!! That was the longest article I have ever written :) OK, I guess by now you have the idea that Windows 2008 clustering is not very complicated if you have the right tools and resources. That is just the outer layer, though; internally, to secure the environment, we’ll need to consider things like CHAP authentication, IPsec, etc. Since this is a 101 article, I kept everything simple.

Let me know your comments (good or bad) about the article so I’ll be able to provide better information that will be helpful for you all.